80/20 MLOps

You need to spin up an MLOps solution for a new team. Without deeply knowing the problem area, the team’s budget, or its software development cycle, what solution could you recommend to get any team up and running quickly, without spending needlessly, while keeping the platform evolvable down the line?

In this post, we’ll learn why Kubeflow is the right starting point for 80% of ML platform use cases, why it saves on cost, and how it lets teams evolve their platform with their individual needs. Finally, we’ll set up a multi-user data science experimentation and productionization platform in 20 minutes on both AWS and Google Cloud.

Alternatives

Fully managed MLOps platforms like Neptune.ai, Domino Data Lab, and H2O.ai, or Platform as a Service (PaaS) options like SageMaker Studio and Azure AI, bring lower setup times, less maintenance, and convenient integration. However, as we’ll explore below, a self-managed solution running on Kubernetes brings enormous flexibility and cost improvements.

Key Functional Requirements

Requirement 1: Iteration

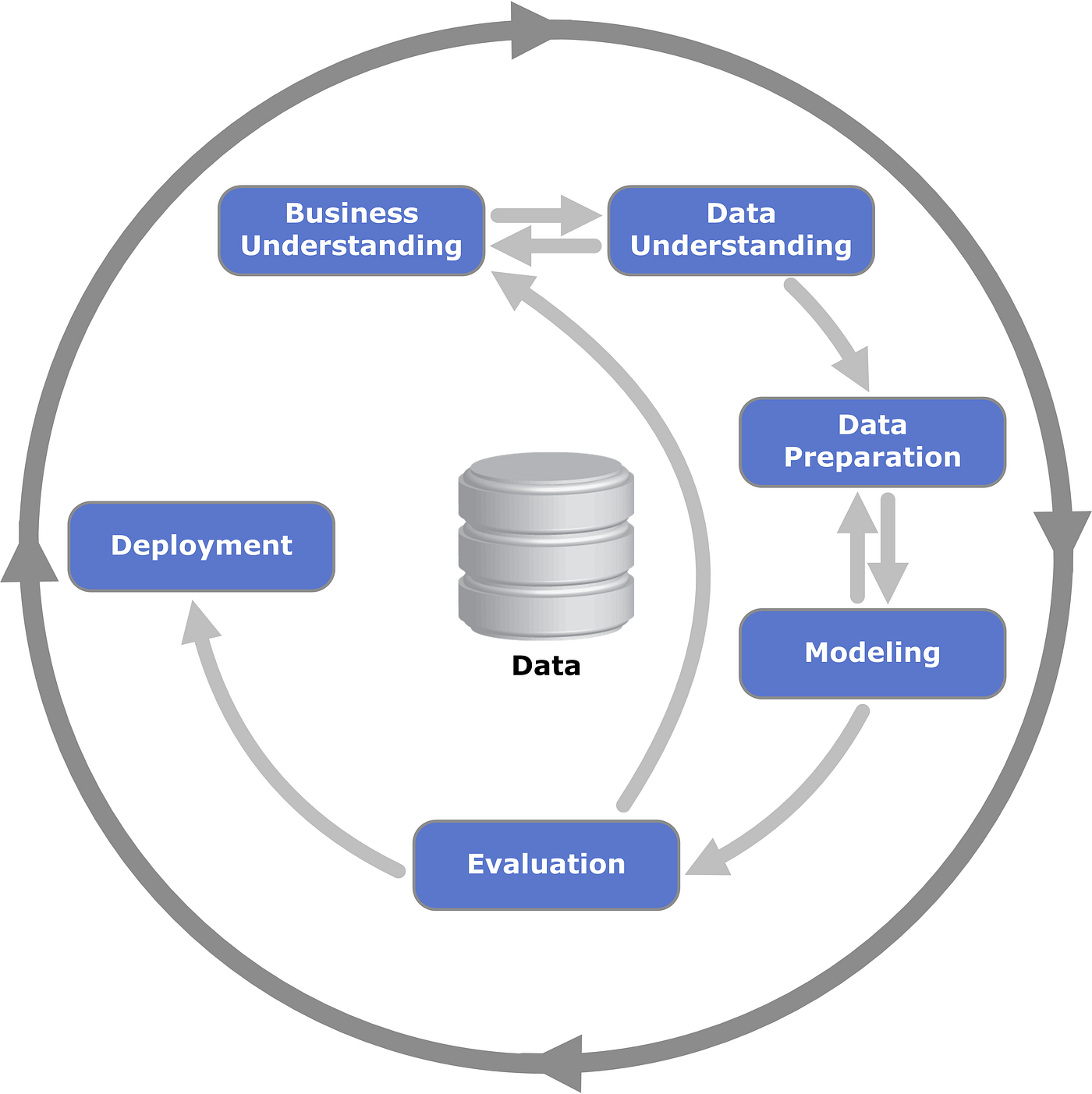

This graphic of the CRISP-DM cycle has been around since before the invention of the transformer, but the core rules still apply. Whether you’re training a robot or anything else, ML is about experimenting, learning from the results, and iterating again as quickly and cheaply as possible.

But as machine learning teams scale, iteration speed is stymied by the need to react to individual models and problem areas. Some models require distributed GPU clusters; others can be run on a single CPU. Some have hard-to-configure dependency environments that must be shared seamlessly between scientists. Model serving adds another large layer of complexity, with batch vs. online serving, event-driven vs. request-response patterns, and CI/CD requirements.

Requirement 2: Unified Development

An ideal MLOps solution lets teams manage the varying components of the machine learning cycle without jumping between different interfaces and tools. A balkanized MLOps solution suffers from disparate code repos within a project, out-of-sync CI/CD and deployment processes, and untestable (and often insecure) handoffs across architectures. If our goal is to grease the gears of the iteration cycle referenced above, disparate tools move us in the wrong direction.

The ideal solution unifies as much of the model development cycle as possible, including ETL, experimentation, model deployment, and monitoring. Industry leaders have recognized this, as shown by the SageMaker Unified Studio announcement in Dec 2024.

Requirement 3: Flexible Integration and Experimentation

ML use cases are as diverse as the business cases and software environments they apply to. Even within a domain such as Natural Language Processing (NLP), there are countless subdomains, each with its own data processing techniques, data stores, and resource needs.

For these reasons, Kubernetes [3] stands out for its universal flexibility. PyTorch model training, distributed Ray ETL jobs, and inference via vLLM can all run efficiently on Kubernetes. This is a key reason why Kubernetes is widely used across top-tier tech companies and AI labs. In January 2021, nearly two years before releasing ChatGPT, OpenAI described scaling a Kubernetes cluster to 7,500 nodes.

Non-Functional Requirement: Low-Cost and Portability

Cost

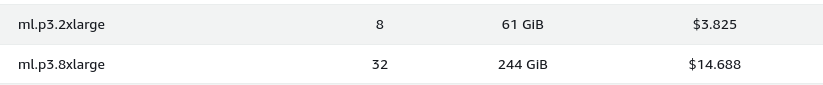

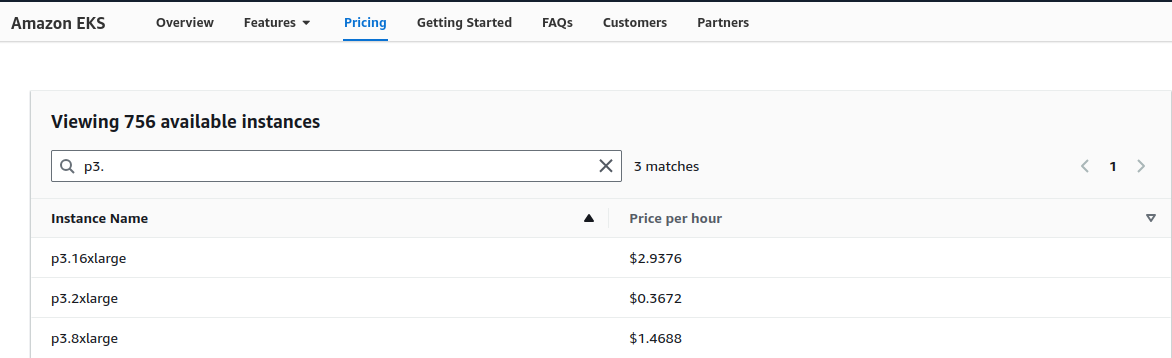

Besides flexibility, Kubernetes gives access to a lower cost per unit of compute than more managed or Platform as a Service alternatives. For example, training a model on a p3.2xlarge instance with AWS SageMaker costs \$3.825 per hour at time of writing. By contrast, running the same instance in an Elastic Kubernetes Service (EKS) cluster costs \$0.3672 per hour. The SageMaker instance costs over 10 times as much!

Portability

In business, dependencies are risks. A dependency on an AWS PaaS means you cannot act on price without incurring high switching costs. This is especially crucial when you consider the long-term lifecycle of your business. A decision to use Neptune today commits you to Neptune continuing to fit your needs. Likewise, it makes it costly to switch to new cloud competitors who offer cheaper options.

An Example: Kubeflow on GCP

Picking An ML Kubernetes Framework

Kubeflow, Metaflow, Bodywork, and Flyte are all ML frameworks that run on Kubernetes and have open source versions. Kubeflow has multiple advantages. First, it is well supported: it was initially developed by Google and is now a Cloud Native Computing Foundation (CNCF) project. Another strength is its thoroughness. Kubeflow offers specialized Kubernetes operators [4] for notebooks, workflows and schedules, training, model tuning, serving, and a model registry, plus a central monitoring dashboard. Because these are separate components, users can decide which ones are necessary at a given time. This makes Kubeflow adjustable to small projects or enterprise scale, as opposed to, say, Metaflow, which is more of an all-in-one solution meant for large-scale projects. Unlike Metaflow, it is also cloud-agnostic.

1. Network & Subnets

Kubeflow components consist of pods (jobs, notebooks, pipeline steps) and services (microservices, load balancers), which need their own network and IP addresses. In the following Terraform code, we create a network and a private subnet, with secondary IP ranges split between pods and services. The subnet is private by default. In practice, we’ll want to add a NAT gateway to at least allow outgoing internet traffic, so we can retrieve models from Hugging Face or pull data. If serving a public-facing model, we’d likely want a separate public subnet as well. For now, we’ll allow traffic out, but not in.

    # Network for Kubeflow
    resource "google_compute_network" "kubeflow_network" {
      name                    = "${local.cluster_name}-network"
      auto_create_subnetworks = false
    }

    # Subnet for Kubeflow
    resource "google_compute_subnetwork" "kubeflow_subnet" {
      name          = "${local.cluster_name}-subnet"
      ip_cidr_range = "10.0.0.0/16"
      region        = local.config.gcp_region
      network       = google_compute_network.kubeflow_network.self_link

      # Secondary range used for pod IPs
      secondary_ip_range {
        range_name    = "pods"
        ip_cidr_range = "10.1.0.0/16"
      }

      # Secondary range used for service IPs
      secondary_ip_range {
        range_name    = "services"
        ip_cidr_range = "10.2.0.0/16"
      }
    }
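The NAT gateway mentioned above could be sketched with a Cloud Router and a Cloud NAT resource. This is a minimal sketch, not part of the original stack: the resource names `kubeflow_router` and `kubeflow_nat` are illustrative. Cloud NAT only serves instances that have no external IP, so it also assumes the cluster's nodes are private (for example via `private_cluster_config` with `enable_private_nodes = true`, which the cluster resource in this post does not set).

```hcl
# Cloud Router that hosts the NAT configuration (illustrative name)
resource "google_compute_router" "kubeflow_router" {
  name    = "${local.cluster_name}-router"
  region  = local.config.gcp_region
  network = google_compute_network.kubeflow_network.id
}

# Cloud NAT: outbound-only internet access for instances without external IPs
resource "google_compute_router_nat" "kubeflow_nat" {
  name   = "${local.cluster_name}-nat"
  router = google_compute_router.kubeflow_router.name
  region = google_compute_router.kubeflow_router.region

  # Let GCP allocate the NAT IP addresses automatically
  nat_ip_allocate_option = "AUTO_ONLY"

  # Apply NAT to every subnet and IP range in the network, including pod ranges
  source_subnetwork_ip_ranges_to_nat = "ALL_SUBNETWORKS_ALL_IP_RANGES"
}
```

Because NAT only translates outbound connections, this matches the "traffic out, but not in" goal: pods can pull models and data, but nothing outside the network can initiate a connection to them.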

2. Kubernetes Cluster

We define our Kubernetes cluster in Google Kubernetes Engine (GKE). We remove the default node pool so that our node pool can be customized per workload.

    # GKE Cluster
    resource "google_container_cluster" "primary" {
      name     = local.cluster_name
      location = local.config.gcp_region

      # Drop the default node pool; dedicated pools are defined separately.
      # GKE still requires an initial node count to create the cluster.
      remove_default_node_pool = true
      initial_node_count       = 1

      # GKE Version
      min_master_version = local.config.gke_version

      # Workload Identity
      workload_identity_config {
        workload_pool = "${local.config.project_id}.svc.id.goog"
      }

      # Network configuration for Kubeflow
      network    = google_compute_network.kubeflow_network.self_link
      subnetwork = google_compute_subnetwork.kubeflow_subnet.self_link

      # IP allocation for pods and services
      ip_allocation_policy {
        cluster_secondary_range_name  = "pods"
        services_secondary_range_name = "services"
      }

      # Enable network policy
      network_policy {
        enabled = true
      }

      # Addons
      addons_config {
        network_policy_config {
          disabled = false
        }
      }
    }

3. Service Accounts / Roles

The nodes in the Kubeflow cluster need a service account with roles that grant the permissions they require in GCP.

    # Service Account for Kubeflow nodes
    resource "google_service_account" "kubeflow_node_sa" {
      account_id   = "${local.config.cluster_name}-node-sa"
      display_name = "Kubeflow Node Service Account"
    }

    # Grant each role to the node service account at the project level
    resource "google_project_iam_member" "kubeflow_node_sa_roles" {
      for_each = toset([
        "roles/logging.logWriter",
        "roles/monitoring.metricWriter",
        "roles/monitoring.viewer",
        "roles/storage.objectViewer"
      ])

      project = local.config.project_id
      role    = each.value
      member  = "serviceAccount:${google_service_account.kubeflow_node_sa.email}"
    }

4. Node Pools

We’ll include a node pool for GPU instances that starts empty and can autoscale up to 3 GPU nodes for our initial training run.

    resource "google_container_node_pool" "kubeflow_gpu_pool" {
      name     = "${local.config.cluster_name}-gpu-pool"
      location = local.config.gcp_region
      cluster  = google_container_cluster.primary.name

      # Start with no GPU nodes and let the autoscaler add them on demand
      initial_node_count = 0

      autoscaling {
        min_node_count = 0
        max_node_count = 3 # applies per zone when location is a region
      }

      node_config {
        preemptible     = true
        machine_type    = "n1-standard-4"
        disk_size_gb    = 100
        disk_type       = "pd-standard"
        service_account = google_service_account.kubeflow_node_sa.email

        oauth_scopes = [
          "https://www.googleapis.com/auth/cloud-platform"
        ]

        labels = {
          purpose = "kubeflow-gpu"
        }

        metadata = {
          disable-legacy-endpoints = "true"
        }

        # GPU configuration
        guest_accelerator {
          type  = "nvidia-tesla-t4"
          count = 1 # GPUs per node; T4s attach in counts of 1, 2, or 4, so 3 is rejected
        }
      }

      management {
        auto_repair  = true
        auto_upgrade = true
      }
    }
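Since the default node pool is removed and the GPU pool starts at zero nodes, Kubeflow's own services (Istio, Dex, the dashboard, and so on) also need somewhere to run. GKE taints GPU nodes so that pods which do not request a GPU are not scheduled onto them. The post does not show a CPU pool, so the following is only a sketch of what one might look like: the pool name, machine type, and node counts are assumptions, not values from the original stack.

```hcl
# Hypothetical general-purpose pool for Kubeflow's own services (not in the original post)
resource "google_container_node_pool" "kubeflow_cpu_pool" {
  name     = "${local.config.cluster_name}-cpu-pool"
  location = local.config.gcp_region
  cluster  = google_container_cluster.primary.name

  # Keep at least one node up so the platform components always have capacity
  initial_node_count = 1

  autoscaling {
    min_node_count = 1
    max_node_count = 3 # assumed ceiling; applies per zone when location is a region
  }

  node_config {
    machine_type    = "e2-standard-4" # assumed size
    disk_size_gb    = 100
    service_account = google_service_account.kubeflow_node_sa.email
    oauth_scopes    = ["https://www.googleapis.com/auth/cloud-platform"]

    labels = {
      purpose = "kubeflow-cpu"
    }
  }

  management {
    auto_repair  = true
    auto_upgrade = true
  }
}
```

Keeping CPU and GPU workloads in separate pools is what makes the "customized per workload" approach pay off: the inexpensive pool stays up for the platform, while the GPU pool scales to zero between training runs.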

Running terraform plan will show the resources this stack would create. It should describe a simple cluster. Next, to deploy the cluster to our GCP project, we can run terraform apply. If this is a new project, we’ll first need to enable the GKE API for the project and link the project to a billing account.
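For the plan to succeed, the `local.config` and `local.cluster_name` values referenced throughout the snippets must be defined, and the GKE API can be enabled from Terraform as well. The post does not show how its locals are built, so the block below is a sketch under assumptions: the `config.yaml` file name is hypothetical, and its keys (`cluster_name`, `gcp_region`, `project_id`, `gke_version`) are inferred from how the snippets use them.

```hcl
# Assumed shape of the shared settings; the file name is hypothetical
locals {
  config       = yamldecode(file("${path.module}/config.yaml"))
  cluster_name = local.config.cluster_name
}

provider "google" {
  project = local.config.project_id
  region  = local.config.gcp_region
}

# Enable the GKE API so the cluster can be created in a fresh project
resource "google_project_service" "container" {
  project = local.config.project_id
  service = "container.googleapis.com"

  # Leave the API enabled if this stack is destroyed
  disable_on_destroy = false
}
```

With this in place, adding `depends_on = [google_project_service.container]` to the cluster resource would make Terraform wait for the API before creating the cluster. Linking a billing account is still a separate step.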

Installing Kubeflow

Kubernetes describes everything it runs as objects [10]. Each object includes a spec, which describes the desired state of your application, and a status, which describes how the application is actually running. Kubernetes works continuously to make the status match the spec. The spec is defined in a config file (usually YAML) called a manifest.

Kubernetes offers a myriad of ways to install packages. Kubeflow can be installed from the Kubeflow manifests repository, either as the entire platform or as individual components as needed. See https://github.com/jamesdvance/MLOPtionS/blob/main/kubeflow_gcp_tf/install-kubeflow_gke.sh for details.

What We Install

- cert-manager: Manages TLS certificates automatically for secure communication between services

- Istio: Service mesh providing traffic management, security, and observability for microservices communication

- Dex: Identity provider enabling OAuth2/OIDC authentication and user management

- OAuth2 Proxy: Authentication proxy that integrates with Dex to secure web applications

- Knative: Serverless platform for running event-driven workloads and auto-scaling applications

- Kubeflow Pipelines: Orchestrates machine learning workflows and experiments with versioning and reproducibility

- Katib: Hyperparameter tuning and neural architecture search system for automated ML optimization

- Central Dashboard: Web UI providing unified access to all Kubeflow components and user workspaces

- Jupyter Notebook Controller: Manages interactive development environments for data science workflows

- Training Operator: Handles distributed training jobs for frameworks like TensorFlow, PyTorch, and XGBoost

Logging In

Finally, we can access the Kubeflow interface via its URL. We can find the external IP for the Kubeflow application with the following CLI command:

    kubectl get svc istio-ingressgateway -n istio-system

Afterwards, we can share this link with our coworkers or Kaggle teammates and start collaborating!

Resources

[1] Spotify’s cluster

[2] Combinator.ml

[3] Kubernetes

[4] Kubeflow Components

[5] Metaflow

[6] Bodywork

[7] Kubernetes Manifest

[8] OpenAI Scaling Kubernetes

[9] OpenAI Case Study Kubernetes

[10] Kubernetes Objects

[11] Swiss Army Cube

[12] Kubeflow on EKS
